For most of the 20th century, automobiles had few if any electronic components, and everything from ignitions to carburetors was controlled mechanically. But as governments across the globe began mandating emissions controls and better fuel economy in the 1970s, the impetus for more sophisticated solutions emerged.

Source | smoothgroover22/Flickr

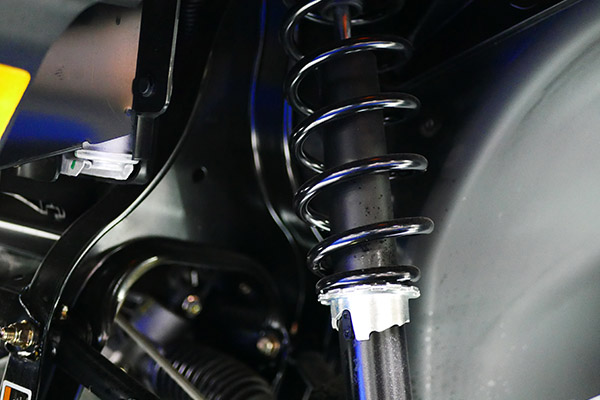

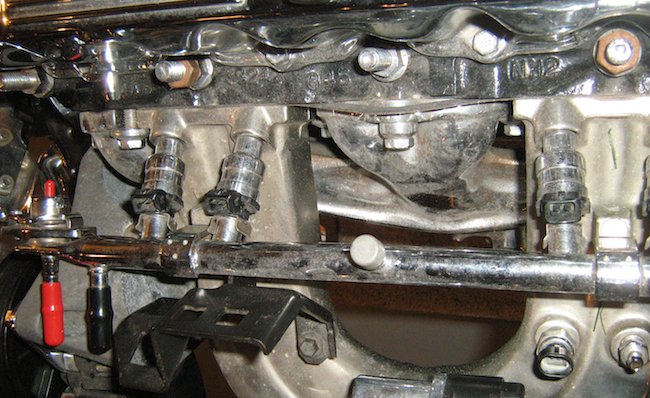

The first computers started making their way into cars in the late 1960s and early 1970s, mainly in the form of circuit boards and pre-programmed microchips that handled very specific tasks, such as regulating fuel injection, ignition timing and spark, and emissions. Bosch's first computer-controlled electronic fuel injection system debuted in the 1968 Volkswagen Type 3, but it wasn't until the 1980s that microchips and microprocessors became small enough to fit into cars. Of course, today's cars are as much computer as engine. That is no surprise given the growth of electric and hybrid vehicles and the inevitable march toward autonomous cars that rely on AI, machine learning, and the smooth operation of many other technologies to function.

Like many automotive innovations, the development of computers in cars was the result of teamwork among many companies, universities, and researchers, so it's hard to credit individuals with many of the biggest milestones. Still, these four people, among many others, are notable for helping nudge along the evolution of computing in cars.

Gary Boone

With new fuel efficiency and emissions controls mandated by the EPA in the 1970s, automakers looked to the semiconductor industry to help manage the powertrain more effectively.

Gary Boone, a computer engineer at Texas Instruments, is credited with inventing the microcontroller. This single chip contained all the circuits needed for a calculator (minus the display and keypad) and was a precursor to the modern personal computer. Microcontrollers with on-board preprogrammed software went on to control everything from keyboards and televisions to VCRs and automatic ignition systems.

After Texas Instruments and a stint at LED manufacturer Litronix, Boone joined Ford to help bring more electronics into its cars, an inevitable byproduct of emissions and fuel-economy mandates. “The compelling force that launched electronics into the powertrain, into the core of automotive engineering design, was the government,” Boone said in a 1996 interview. But lower emissions and better fuel economy could not come at the expense of performance, either. “You [had] this three-way contest for how the powertrain shall perform, trying to minimize emissions, maximize fuel economy, and at least retain if not improve performance. It was horrible. The mechanical solutions were having greater and greater difficulty meeting the mandates.”

Enter microprocessors, which were increasingly able to process data from a growing number of sensors and translate it into precise regulation of the powertrain's spark and fuel. Boone was able to bridge the two cultures: he set at ease the mechanical engineers who were wary of electronic emissions controls and electronic control modules in cars, while also schooling high-tech companies such as Intel and Motorola on the need for microchips that could withstand car-specific challenges such as wide temperature swings and the demand for sheer reliability.

Stefan Savage

In the wildly enthusiastic run-up to an autonomous-car future, computer security is often forgotten. But thanks to Stefan Savage, a professor of computer science and engineering at the University of California, San Diego, computerized cars may just have a chance at being a little safer and less vulnerable to the cybersecurity threats bedeviling seemingly everything else that's networked. Savage and his team of researchers realized that the increasing connectivity of cars made them vulnerable to bad actors. In 2010, they were the first to show that it was possible to remotely hack into a car. By thinking the way hackers do, they were eventually able to take over the engine, brakes, and windshield wipers, as well as eavesdrop on in-cabin conversations. “Modern cars are basically distributed computers that happen to have wheels on them,” Savage told the Los Angeles Times. “These systems work better and more efficiently than they did before, but they also have a lot of fragility against someone who educates themselves about the details of how they work and is interested in disrupting them.”

Savage continues to work directly with automakers to help them address vulnerabilities. As a result of his efforts, manufacturers are taking vehicle cybersecurity seriously, which has led to recalls and to the ability to update on-board software over the air, much as computers and smartphones do, to keep cars more secure. Savage has received numerous awards, including a 2017 fellowship from the MacArthur Foundation (popularly known as a MacArthur “genius” award).

Source | Mobileye

Amnon Shashua

The AI that goes into semi- and fully autonomous cars is the product of many technologies, teams, and innovators, but one Israeli technologist in particular has been at it for a long time. Amnon Shashua, who studied math and computer science at Tel Aviv University, the Weizmann Institute, and MIT, first developed the idea of putting cameras in cars as far back as the 1980s. It wasn't until 1999, however, that he teamed up with Ziv Aviram to found Mobileye, one of the foremost developers of computer vision and safety systems for cars.

The company uses a mix of cameras, computer vision, machine learning, and AI to power collision warnings, adaptive cruise control, lane departure and blind spot warnings, and other semi- and fully autonomous features for 27 different automakers. In 2017, Intel bought the company for more than $15.3 billion, instantly putting itself at the forefront of autonomous car technology, a market Intel believes will be worth $70 billion by 2030. Aviram left the company not long after the acquisition, but Shashua remains at its helm heading into the next decade. At the most recent CES, the Intel subsidiary announced 15 autonomous car projects across 14 manufacturers, four of them using its new EyeQ4 system on a chip (SoC), an all-in-one integrated circuit that can handle computationally intensive computer-vision tasks without consuming too much power.

Dean Pomerleau

Many of today's cars use neural networks that can be trained to recognize people, animals, lane markings, street signs, and other obstacles and realities of the road. This may seem like a new concept, but the first use of a neural network in a car dates back to the 1980s, when Carnegie Mellon researcher Dean Pomerleau built one into ALVINN (Autonomous Land Vehicle in a Neural Network), a computer-controlled vehicle equipped with a camera and a refrigerator-sized computer that had about one-tenth the processing power of an Apple Watch. It initially drove at 3.5 miles per hour but was eventually able to reach 70 miles per hour.

Its biggest innovation was that, because it used a neural network (a type of machine-learning model), ALVINN could learn to steer by observing a human driver in multiple environments, even at night (with the help of additional tools such as laser reflectance imaging and a laser rangefinder). Though slow and unscalable, it served as a precursor to the autonomous cars of the future, which will increasingly rely on on-board neural networks and edge computing to handle real-world scenarios regardless of weather, lighting, or other visual variables.